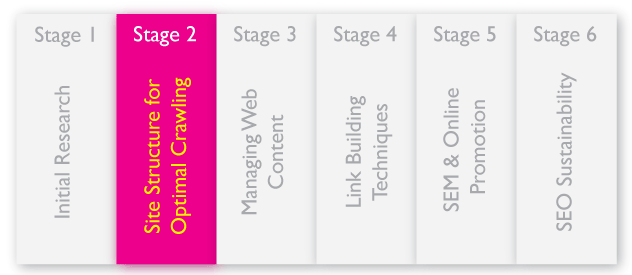

The previous article in this collection focused on the importance of laying the correct foundations for a successful SEO campaign. The backbone of any SEO campaign is keyword selection and deployment.

To fully understand how a Search Engine Optimisation campaign works, you first need to look at the processes all the major search engines use.

To cache all the information available, search engines use what are known as ‘spiders’ or ‘bots’. These crawl the internet and record the websites and links they find.

Website Crawling and Site Structure

The simpler your site structure, the more easily spiders will navigate your site and, as a result, catalogue more of your content and links. By ensuring the correct implementation of site structure, file names and file extensions, you will make sure your website is looked upon favourably by the search engines.

There are a number of useful SEO tools available free online that will show how accessible your site structure is for spiders. Lemonwords, for example, gives a view of each webpage as a robotic spider sees it.

This is useful for determining if your site is too complex to be crawled properly.
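As a rough illustration of what a "spider's eye view" means, the sketch below reduces a page to its visible text and link targets using Python's standard `html.parser` module. It is not how Lemonwords or any search engine works internally; the class and function names are made up for this example.

```python
from html.parser import HTMLParser


class SpiderView(HTMLParser):
    """Keep visible text and link targets; ignore script and style content."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.text = []
        self.links = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Only record text that is outside <script> and <style> blocks.
        if not self._skip_depth and data.strip():
            self.text.append(data.strip())


def spider_view(html):
    """Return (visible_text, links) for a page, a hypothetical helper."""
    parser = SpiderView()
    parser.feed(html)
    parser.close()
    return " ".join(parser.text), parser.links
```

If a page's important content or navigation is missing from this simplified view, that suggests a spider may struggle with it too.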

The following issues should be flagged up as they may affect how efficiently your website is crawled:

• Avoid websites built on table-based layouts.

• Steer clear of using JavaScript for links.

• Spiders still have difficulty crawling Flash sites. The situation is slowly improving, but it is best to show caution for the time being.

• Links within PDF and PowerPoint documents are regularly missed and carry less weight.

• Pages with too many links will not get crawled properly. Keep to no more than 100 links per page.

• Links within forms can be hard to crawl.
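Two of the points above, JavaScript links and the 100-link limit, are easy to check automatically. The following is a minimal sketch using only Python's standard library; the `audit` function and its result keys are hypothetical names chosen for this example, and the test for a "JavaScript link" (a `javascript:` href, or an empty or `#` href with an `onclick` handler) is a simplifying assumption.

```python
from html.parser import HTMLParser

MAX_LINKS = 100  # threshold taken from the list above


class LinkAudit(HTMLParser):
    """Collect <a> links and note which ones depend on JavaScript."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.js_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = (attr.get("href") or "").strip()
        is_js = href.lower().startswith("javascript:") or (
            href in ("", "#") and "onclick" in attr
        )
        if is_js:
            self.js_links.append(href or "onclick")
        elif href:
            self.links.append(href)


def audit(html):
    """Summarise link count and JavaScript-dependent links for one page."""
    parser = LinkAudit()
    parser.feed(html)
    total = len(parser.links) + len(parser.js_links)
    return {
        "total_links": total,
        "javascript_links": len(parser.js_links),
        "too_many_links": total > MAX_LINKS,
    }
```

Running `audit` over the HTML of each page gives a quick list of pages worth reviewing by hand.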

Page Indexing

The more pages a search engine indexes from a website the better. More pages mean more chances that your website will appear within the SERPs (Search Engine Results Pages). The key to ensuring a healthy index rate is to take advantage of sitemaps and robots.txt. These make it easier for your website to be crawled and therefore indexed. Try using the Google Sitemap Generator, which works on both Linux and Windows servers. This will automatically update your sitemap after you have made any changes to your website, ensuring new content is cached by the search engines.
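To make the two files more concrete, here is a minimal sketch in Python that builds a basic XML sitemap and checks which URLs a robots.txt file allows spiders to fetch. It uses only the standard library; the function names and the example.com URLs are placeholders for illustration, and this is not how the Google Sitemap Generator itself works.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML as a string, with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    # Note: no XML declaration is added; prepend one when writing to a file.
    return ET.tostring(urlset, encoding="unicode")


def allowed_urls(robots_txt, urls, agent="*"):
    """Return the URLs that the given robots.txt text lets `agent` fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if parser.can_fetch(agent, u)]


robots = "User-agent: *\nDisallow: /private/\n"
pages = [
    "https://www.example.com/",
    "https://www.example.com/private/notes.html",
]
# Only list pages in the sitemap that spiders are actually allowed to crawl.
sitemap_xml = build_sitemap(allowed_urls(robots, pages))
```

Filtering the sitemap through robots.txt in this way avoids sending spiders mixed signals, where a page is advertised in the sitemap but blocked from being crawled.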

The next stage in this SEO Development collection will look at preparing your webpages for keyword-rich content, covering the benefits of great copywriting and correctly managing all your online assets.
